Getting Started with Automated Test Selection - General guide

Step-by-step guide to integrating Automated Test Selection

This guide walks you through the steps needed to integrate Automated Test Selection into your Python repository. By the end of the guide, you will have integrated Automated Test Selection into your CI/CD or local environment.

Prerequisites

- A Python project that uses the pytest library to run tests

- The project must already be using Codecov. If it isn't, you can set up Codecov by following this guide.

Not ready to use Codecov on your own repositories?

Try it out for yourself with the Codecov tutorials for GitHub, Bitbucket, or GitLab to see what Codecov has to offer.

Getting Started

This guide assumes you are setting up Automated Test Selection in a local environment. The commands should translate easily to CI environments, and full examples are also available for specific CI providers.

This is a simple guide; it doesn't cover many of the concepts and configuration options of Automated Test Selection. Check Further Reading to learn more.

This guide has 2 parts

Part 1 integrates static analysis into your CI. Part 2 integrates label analysis. You need to complete both for a successful Automated Test Selection integration.

Part 1

Step 1. Get the authentication tokens

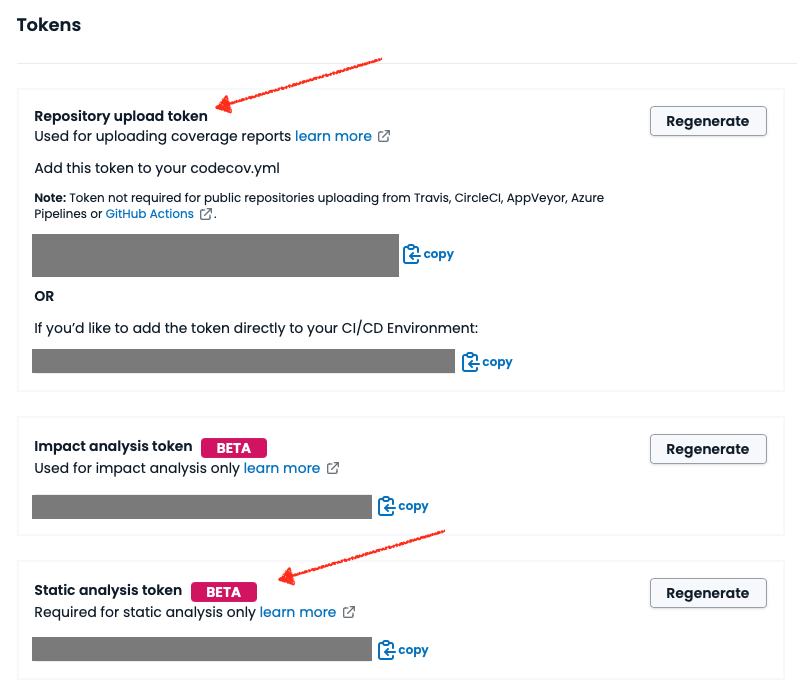

You will need both the Repository upload token (CODECOV_TOKEN) and the Static analysis token (CODECOV_STATIC_TOKEN). You can find them on the Settings page of your repository in Codecov.

Copy these tokens for later. They are used to authenticate actions with the Codecov CLI.

Step 2. Configure the codecov.yml for Automated Test Selection

To use Automated Test Selection you need a flag with the new carryforward mode "labels". You can reuse an existing flag if you already have one (read more about Carryforward Flags). Here we create a "smart-tests" flag with carryforward_mode: "labels".

We're also adding some configuration for the CLI that will be used when uploading the coverage data to Codecov.

flag_management:
  individual_flags:
    - name: smart-tests
      carryforward: true
      carryforward_mode: "labels"
      statuses:
        - type: "project"
        - type: "patch"

cli:
  plugins:
    pycoverage:
      report_type: "json"
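If you want a quick sanity check of this configuration before pushing, you could inspect it once it has been loaded into a Python dict (for example with PyYAML's yaml.safe_load; using PyYAML is an assumption, not something the CLI requires). A minimal sketch; the function names are hypothetical and only check the two settings this step relies on.

```python
from typing import Any, Mapping, Optional


def _section(mapping: Mapping[str, Any], key: str) -> Mapping[str, Any]:
    # Treat missing or empty (null) YAML sections as empty mappings.
    return mapping.get(key) or {}


def flag_uses_label_carryforward(config: Mapping[str, Any], flag_name: str = "smart-tests") -> bool:
    """True if `flag_name` is carried forward with carryforward_mode "labels"."""
    flags = _section(config, "flag_management").get("individual_flags") or []
    for flag in flags:
        if flag.get("name") == flag_name:
            return flag.get("carryforward") is True and flag.get("carryforward_mode") == "labels"
    return False


def pycoverage_report_type(config: Mapping[str, Any]) -> Optional[str]:
    """Return cli.plugins.pycoverage.report_type, or None if it is not set."""
    plugins = _section(_section(config, "cli"), "plugins")
    return _section(plugins, "pycoverage").get("report_type")


# The codecov.yml above, expressed as the dict a YAML loader would return for it.
EXAMPLE_CONFIG = {
    "flag_management": {
        "individual_flags": [
            {
                "name": "smart-tests",
                "carryforward": True,
                "carryforward_mode": "labels",
                "statuses": [{"type": "project"}, {"type": "patch"}],
            }
        ]
    },
    "cli": {"plugins": {"pycoverage": {"report_type": "json"}}},
}
```

With the example above, flag_uses_label_carryforward(EXAMPLE_CONFIG) returns True and pycoverage_report_type(EXAMPLE_CONFIG) returns "json".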

Step 3. Download the Codecov CLI

The Codecov CLI is the interface that runs the commands used by Automated Test Selection. The easiest way to install it is from PyPI; other options are described in the Codecov CLI guide.

pip install codecov-cli

Step 3.5. [Optional] Set your environment variables

The tokens you just copied are used to authenticate several actions with the Codecov CLI. While you can pass them explicitly to each command, it is more convenient to export them as environment variables, which the CLI detects automatically.

export CODECOV_TOKEN=your-codecov-token

export CODECOV_STATIC_TOKEN=your-codecov-static-token

If you decide not to do this, remember that CODECOV_STATIC_TOKEN is used with the static-analysis (step 5) and label-analysis (step 7) commands only. All other commands should use the CODECOV_TOKEN.
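If you drive the CLI from a Python script instead of exporting both variables globally, the rule above can be captured in a small helper. This is a hypothetical sketch, not part of codecov-cli: it only decides which environment variable to read for a given command name, and it does not show how the token is then handed to the CLI.

```python
import os
from typing import Mapping, Optional

# Per this guide, only these two commands authenticate with the static token.
STATIC_TOKEN_COMMANDS = frozenset({"static-analysis", "label-analysis"})


def token_env_var(command: str) -> str:
    """Name of the environment variable holding the token for `command`."""
    return "CODECOV_STATIC_TOKEN" if command in STATIC_TOKEN_COMMANDS else "CODECOV_TOKEN"


def token_for(command: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Look up the token for `command`, failing loudly if it is missing."""
    env = os.environ if env is None else env
    name = token_env_var(command)
    if name not in env:
        raise RuntimeError(f"{name} is not set; export it or pass the token explicitly")
    return env[name]
```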

Step 4. Create commit information

This lets Codecov know about the commit for which you will upload information later. It uses the CLI's create-commit command.

codecovcli create-commit

Step 5. Upload static analysis information

This step runs static analysis on your code and uploads the results to Codecov. It uses the static-analysis CLI command.

The first run can take a while

When running static-analysis for the first time, there's a lot of information to upload to Codecov, so it can take a few minutes. This is normal. Subsequent runs should take only a few seconds.

You can speed up static-analysis by restricting the files it analyzes. For example, if you have a monorepo with many apps and will only use Automated Test Selection in one of them, you can analyze only that app's files and its tests. Read more about how to do that in Static Analysis. The example command below analyzes all files in your project.

codecovcli static-analysis

Step 6. Stop and create a new commit

You've made great progress so far! Have a cookie, we're almost done.

Because Automated Test Selection works by comparing two commits, we need static analysis for more than one commit. The previous command uploaded static analysis information for the current commit, so now we need a second commit to continue.

Unfortunately, this means you'll have to repeat steps 4 and 5 on another commit, for example the parent of the current commit.

git checkout HEAD^

codecovcli create-commit

codecovcli static-analysis

git switch -

The commands above send static analysis data for the parent of the current commit and then return git to the current commit. In this scenario HEAD^ is the BASE of our comparison and HEAD is the... well, HEAD of the comparison.
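When scripting this step, it helps to make sure git always returns to the original commit, even if an upload fails midway. A minimal Python sketch of the same four commands, assuming git and codecovcli are on PATH and the tokens are exported as in step 3.5; the function name is hypothetical, and the run parameter exists only so the sequence can be exercised without touching a real repository.

```python
import subprocess
from typing import Callable


def upload_parent_static_analysis(run: Callable[..., object] = subprocess.run) -> None:
    """Upload static analysis for HEAD^, then return to the original commit."""
    # Check out the parent commit (the BASE of the comparison).
    run(["git", "checkout", "HEAD^"], check=True)
    try:
        # Steps 4 and 5 again, this time for the parent commit.
        run(["codecovcli", "create-commit"], check=True)
        run(["codecovcli", "static-analysis"], check=True)
    finally:
        # Return to the original commit even if one of the uploads failed.
        run(["git", "switch", "-"], check=True)
```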

End of Part 1

Part 2

Depends on Part 1

Only follow the steps below if you have successfully completed Part 1 of the guide.

Step 7. Produce the list of tests to run

You can make label-analysis produce the list of tests to run (plus the options needed to run them so that Automated Test Selection works), which you then pass to pytest yourself. This lets you preserve your existing test execution architecture in CI, but it does require some additional configuration, as detailed below.

To do that, use the --dry-run option. It outputs JSON to stdout in the format shown below. runner_options is the list of arguments you need to pass to your testing tool (e.g. pytest), ats_tests_to_run is the list of tests that Automated Test Selection has decided need to run, and ats_tests_to_skip is the list of tests you can safely skip.

{
  "runner_options": ["--cov-context=test"],
  "ats_tests_to_run": [
    "test_1",
    "test_2"
  ],
  "ats_tests_to_skip": [
    "test_3",
    "test_4",
    "test_5"
  ]
}
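For example, a CI script could read that JSON and assemble the pytest command itself. A minimal sketch, assuming stdout from the label-analysis dry run was captured into a string and contains only the JSON document; the function name is hypothetical, and returning an empty list on an empty selection is this sketch's own choice, not documented CLI behavior.

```python
import json
from typing import List


def pytest_argv_from_dry_run(output: str) -> List[str]:
    """Build a pytest command line from label-analysis --dry-run JSON output.

    Returns an empty list when nothing needs to run: calling pytest with no
    test arguments would collect the whole suite instead.
    """
    data = json.loads(output)
    tests = data["ats_tests_to_run"]
    if not tests:
        return []
    # runner_options (e.g. --cov-context=test) must always come along.
    return ["pytest", *data["runner_options"], *tests]
```

With the sample output above, this returns ["pytest", "--cov-context=test", "test_1", "test_2"], which could be passed to subprocess.run.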

Alternatively, use --dry-run-format=space-separated-list to produce the output as a space-separated list of arguments. This also goes to stdout, in two lines as shown below. The first line is prefixed with TESTS_TO_RUN= and contains the options to pass to the testing tool followed by the tests to run. The second line is prefixed with TESTS_TO_SKIP= and contains the tests that are safe to skip.

The test names are wrapped in single quotes (') so the shell doesn't misinterpret names that include spaces or other special characters.

TESTS_TO_RUN='--cov-context=test' 'test_1' 'test_2'

TESTS_TO_SKIP='--cov-context=test' 'test_3' 'test_4' 'test_5'
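If you consume this format from Python rather than directly in the shell, the standard library's shlex.split applies the same single-quote rules, so a name with spaces stays one argument. A sketch assuming each prefix starts its own line as shown above; the function name is hypothetical.

```python
import shlex
from typing import Dict, List


def parse_space_separated_dry_run(output: str) -> Dict[str, List[str]]:
    """Split the TESTS_TO_RUN= and TESTS_TO_SKIP= lines into argument lists."""
    parsed: Dict[str, List[str]] = {}
    for line in output.splitlines():
        # Split on the first "=" only; later ones belong to the arguments.
        key, sep, rest = line.partition("=")
        if sep and key in ("TESTS_TO_RUN", "TESTS_TO_SKIP"):
            # shlex honours shell quoting, so 'a test with spaces' stays one item.
            parsed[key] = shlex.split(rest)
    return parsed
```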

Why --cov-context=test?

This is the option you need to pass to pytest (it comes from the pytest-cov plugin) so that it records the test labels in the coverage report. It is specific to pytest, but expect every testing tool to need some equivalent option. You must run pytest with this option, or Automated Test Selection can't work.

If you modify the list (e.g. split it to run in multiple processes), remember to apply that option to every copy.
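For instance, if you fan the selected tests out over several processes, each resulting command has to carry the runner options. A hypothetical round-robin splitter illustrating that; your existing parallelization setup may divide work differently, and the helper name is an assumption.

```python
from typing import List, Sequence


def split_into_shards(runner_options: Sequence[str], tests: Sequence[str], shards: int) -> List[List[str]]:
    """Distribute tests round-robin across `shards` pytest invocations.

    Every invocation gets the full runner_options, so each process still
    records the test labels. Empty shards are dropped.
    """
    if shards < 1:
        raise ValueError("shards must be at least 1")
    buckets = [list(tests[i::shards]) for i in range(shards)]
    return [["pytest", *runner_options, *bucket] for bucket in buckets if bucket]
```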

Please note: if you already run tests in parallel, we recommend that you do not remove that parallelization while setting up Automated Test Selection. Depending on the code change, Automated Test Selection may choose to run all tests, and without parallel test execution those runs can take significantly longer.

Step 7a (alternative). Run Label Analysis and execute tests

Alternatively, you can have the CLI run the selected tests itself. In this step we run the label-analysis CLI command, which does three things:

- Collects the test labels from your test suite;

- Uploads them to Codecov along with the commit to compare against HEAD, and waits for a response;

- Executes the tests in the set returned by Codecov (this is optional).

Adding options when running tests

You probably run your tests with some options. To learn how to configure pytest options and take full control of the label-analysis process, see Runners. However, if you want to preserve your existing test execution architecture as much as possible, we recommend Step 7 above.

codecovcli label-analysis --base-sha=$(git rev-parse HEAD^)

The end result of this step should be a coverage report with the test results.

Step 8. Upload to Codecov

The final step is uploading the generated coverage information to Codecov. This information needs to be generated with the test context information (the labels), otherwise Automated Test Selection will not work. We also need to use the flag configured in step 2 (smart-tests).

Currently only the JSON format is supported for Automated Test Selection. The easiest way to produce it is with the CLI's pycoverage plugin. The compress-pycoverage plugin is optional but recommended, as it can greatly reduce the size of the report uploaded to Codecov.

codecovcli create-report

codecovcli --codecov-yml-path=codecov.yml do-upload --plugin pycoverage --plugin compress-pycoverage --flag smart-tests

And that's it! Thanks for sticking around.

Wait... how do I know if it's working?

To check that it's really working, look at the CLI logs for the summary of information about the tests to run. On the first run with label analysis, expect that line to contain only absent_labels information. This is because Codecov has no coverage information for any tests in your test suite yet.

info - 2023-05-02 17:11:55,620 -- Received information about tests to run --- {"absent_labels": 47, "present_diff_labels": 0, "global_level_labels": 0, "present_report_labels": 0}

Over time, we expect more labels to appear in present_report_labels, so that only new tests are in absent_labels and tests affected by your recent changes are in present_diff_labels, similar to the example below.

info - 2023-05-02 17:11:55,620 -- Received information about tests to run --- {"absent_labels": 3, "present_diff_labels": 5, "global_level_labels": 0, "present_report_labels": 47}
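To automate this check, for example in a CI job that saves the CLI log, you could scan for that summary line. A sketch that assumes the log format shown above; it parses human-readable log output rather than a documented interface, so it may break if the CLI changes its messages. The function names are hypothetical.

```python
import json
from typing import Any, Dict, Optional

SUMMARY_MARKER = "Received information about tests to run --- "


def parse_label_summary(log_line: str) -> Optional[Dict[str, Any]]:
    """Return the label counts from a summary log line, or None for other lines."""
    _, found, payload = log_line.partition(SUMMARY_MARKER)
    return json.loads(payload) if found else None


def has_report_labels(summary: Dict[str, Any]) -> bool:
    """True once Codecov already has labelled coverage for some tests."""
    return summary.get("present_report_labels", 0) > 0
```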

Further Reading

- Overview of Automated Test Selection - Automated Test Selection

- Troubleshooting Automated Test Selection integration - 🔥Troubleshooting

- Overview of the Codecov CLI - The Codecov CLI

- Details on Static Analysis - Static Analysis

- Details on Label Analysis - Label Analysis
